Advice on reading research papers by Prof. Andrew Ng (CS230)

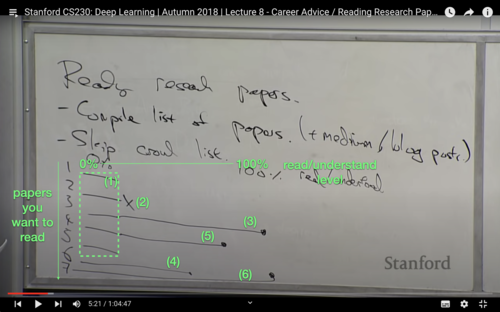

This post collects my notes on the ‘Reading Research Papers’ part of a Stanford CS230 Deep Learning lecture on YouTube. The session is all about how to master the body of literature on a topic you want to learn. I’ve written down what he wrote on the blackboard along with his verbal comments.

- Compile a list of papers (+ Medium/blog posts)

- Skip around the list

  - (1) Read about 10% of each paper, or quickly skim each one to get a rough understanding of it.

  - (2) If, based on that, you decide that paper 2 is worthless (others say the authors got it wrong, or you read it and it just doesn’t make sense), go ahead and forget it.

  - (3) As you skip around to different papers, you might decide that paper 3 is a seminal one, and then go ahead and read and understand the whole thing.

  - (4) Based on that, you might then find paper 6 in its citations and read that.

  - (5) Go back and flesh out your understanding of paper 4.

  - (6) Then find paper 7 and read it all the way through to the conclusion.

- Number of papers you should read

  - 15-20 papers: enough to do some work and apply some algorithms.

  - 50-100 papers: enough to give you a very good understanding of an area.

- How to read one paper

  - The bad way to read a paper is to go from the first word to the last word.

  - Take multiple passes through the paper.

    - Title/abstract/figures: the most

    - Intro + conclusions + figures + skim the rest (skip/skim the related work)

    - Read, but skip/skim the math

    - Whole thing, but skip parts that don’t make sense

- Questions to check that you understand the paper well

  - What did the authors try to accomplish?

  - What were the key elements of the approach?

  - What can you use yourself?

  - What other references do you want to follow?

- Sources of papers

  - Twitter (@kiankatan, @AndrewYNg)

  - ML subreddit

  - NIPS/ICML/ICLR

  - Friends

  - arXiv Sanity

  - (TMK) Papers with Code (web), Alpha zeta vector (YouTube)

- To understand a paper more deeply:

  - Math

    - Rederive it from scratch

  - Code

    - Download/run open-source code

    - Reimplement from scratch

- Longer-term advice

  - Steady reading

  - Not short bursts

I’m grateful to Andrew Ng for sharing his wise and practical advice. At the end of this lecture, he said, ‘Some of this I wish I had known when I was a first-year Ph.D. student, but c’est la vie.’ I agree, but I feel lucky to know it now. It’s never too late to start. So keep going, and happy reading!